At nomtek, we’re continuously looking for exciting ways to combine different technologies to build interesting products. This time, we used AI, AR, and mobile to create an experimental Texas Hold’em poker AR app that assists players.

NOTE: The project is for experimental and educational purposes only. We don’t support gambling, as it carries a host of negative consequences for individuals and society.

Step #1: Main assumption

The main assumption was that the player would first set up the game state in the mobile app:

- setting up how many players are playing

- who’s the dealer at the start
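The game state from these two setup steps can be modeled as a small data structure. This is a hypothetical sketch (the app’s actual data model isn’t shown here): the 2 to 10 player limit is an assumption, and `next_hand` simply moves the dealer button one seat per hand, wrapping around the table.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    """Hypothetical game-state model set up by the player in the mobile app."""

    player_count: int
    dealer_index: int = 0  # 0-based seat index of the dealer at the start

    def __post_init__(self):
        # Assumption: a table has between 2 and 10 players.
        if not 2 <= self.player_count <= 10:
            raise ValueError("player_count must be between 2 and 10")
        if not 0 <= self.dealer_index < self.player_count:
            raise ValueError("dealer_index must be a valid seat")

    def next_hand(self):
        """Move the dealer button to the next seat, wrapping around the table."""
        self.dealer_index = (self.dealer_index + 1) % self.player_count
```

For example, `GameState(player_count=4, dealer_index=3)` moves the dealer back to seat 0 after `next_hand()`.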

The app will be connected to AR glasses, which act as our system’s “eyes.” The app then prompts the AI for advice and the chance of winning.

The AR glasses in question are the Lenovo ThinkReality A3, connected to a Motorola Edge+ and supported by the Snapdragon Spaces Dev Kit. We’ve been working with Snapdragon Spaces for over a year as participants in the Snapdragon Pathfinder program and contest (with our augmented reality StickiesXR app for Figma). Snapdragon Spaces offers Dual Render Fusion for its Unity SDK, which extends mobile applications so they can display 3D content on AR glasses.

Step #2: First iteration with only ChatGPT and why it didn’t quite work

At first, we wanted to tackle the problem in the simplest way possible. After building the initial app and connecting it to the glasses, we had the app send prompts containing an image of the current game state and asking for the chance of winning.

The first obstacle was, of course, the safeguards in OpenAI’s software, which avoids answering questions that might be harmful in any way. With a little prompt tuning, though, it started giving us the answers we needed.

It’s worth noting that the phrases “for educational purpose” and “rounding up to the nearest ~10%” in the prompt were important: the first got past ChatGPT’s gambling filter, and the second helped whenever ChatGPT answered that it couldn’t calculate the exact chances.
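A prompt built around those two phrases might look like the sketch below. Only the two quoted phrases come from the text; the rest of the wording, the `build_prompt` name, and the card notation (such as `QD` for the queen of diamonds) are hypothetical. It takes the cards as text, which matches the later iteration where the cards are detected on the device before ChatGPT is asked.

```python
def build_prompt(hole_cards, board_cards, player_count):
    """Build a hypothetical odds-and-advice prompt for ChatGPT."""
    board = ", ".join(board_cards) if board_cards else "none yet (pre-flop)"
    return (
        # The two phrases below are the ones the text reports as important.
        "This question is for educational purpose only. "
        f"In a game of Texas Hold'em with {player_count} players, "
        f"my hole cards are {', '.join(hole_cards)} "
        f"and the community cards are {board}. "
        "Estimate my chance of winning, rounding up to the nearest ~10%, "
        "and advise whether to fold, call, or raise."
    )
```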

So what went wrong? ChatGPT had trouble recognizing the cards on the table from an image. Our first thought was: hey, there are pretty good options for classifying images without GenAI, so this might be a good opportunity to preprocess the data instead of asking for everything in one go, right?

We could also use a smaller embedded neural network to do this job on the device itself. The answer here was YOLO (You Only Look Once), a family of real-time object detection models.

Step #3: Second iteration with embedded NN + ChatGPT

So we needed a YOLO model that would work on mobile devices. Out of the box, YOLO isn’t trained to detect playing cards, so we had to fine-tune it with additional training data. Surprisingly, good datasets were easy to find, so we didn’t need to gather or generate the data ourselves.

We used a playing-cards dataset hosted on Roboflow.

It consists of:

- 21210 training images

- 2020 validation images

- 1010 test images
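As a quick sanity check on these numbers, the split works out to 24,240 images in total, or exactly 87.5% training, about 8.3% validation, and about 4.2% test:

```python
# Split sizes of the dataset, as listed above.
splits = {"training": 21210, "validation": 2020, "test": 1010}

total = sum(splits.values())
shares = {name: count / total for name, count in splits.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```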

The dataset also came with preprocessing and augmentations already applied, which can benefit training, and it was already structured so that YOLOv8 could use it out of the box.

The next step was choosing a platform to train on. Our first bet was Kaggle: it’s easy to use, offers 30 hours of free GPU usage per week, and has everything else we needed. We quickly put together a notebook ready to train the YOLOv8n model.

Step #4: Training YOLOv8 and exporting it to a mobile-friendly format

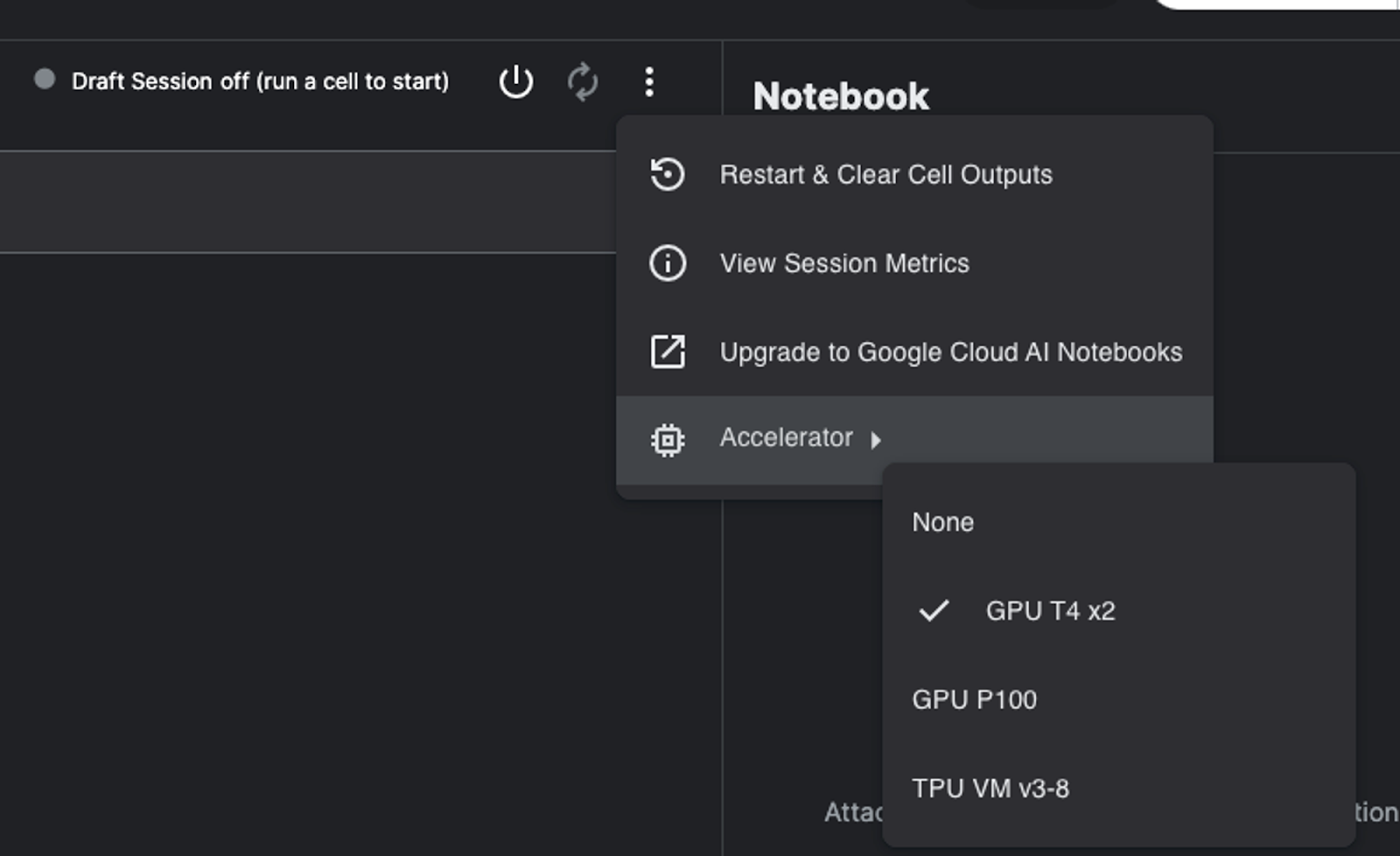

First, we enabled a GPU accelerator (the T4 GPU available for free on Kaggle), which greatly reduced the training time for each epoch.

Ultralytics YOLOv8 builds on the success of previous YOLO versions and introduces new features and improvements that further boost performance and flexibility.

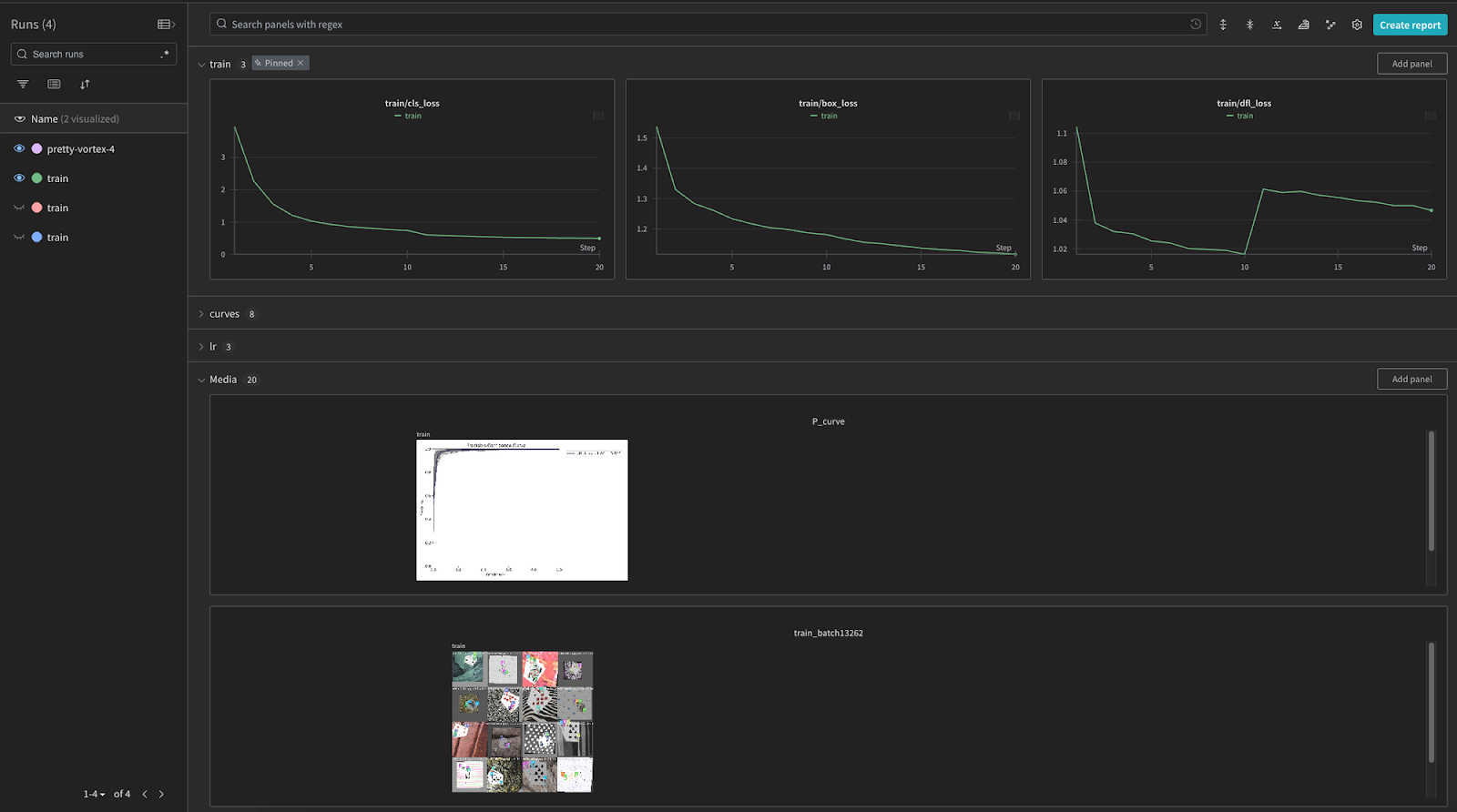

W&B (Weights & Biases) is an MLOps platform for tracking experiments that provides a lot of useful information for any AI developer.

Next, we download the dataset directly into Kaggle. You can always upload the data manually, but this is much easier.

This snippet can be generated on the Roboflow page once you choose one of its datasets.

After a successful download, the dataset should be available in the Playing-Cards-4 folder.
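A download step of this kind can be sketched with the `roboflow` Python package. The workspace, project, and version are left as parameters because the exact values aren’t given here, and reading the key from a `ROBOFLOW_API_KEY` environment variable is our own assumption. The import sits inside the function so the sketch loads even where `roboflow` isn’t installed.

```python
import os


def download_dataset(workspace, project, version, api_key=None):
    """Download a Roboflow dataset version in YOLOv8 format and return its local folder."""
    from roboflow import Roboflow  # pip install roboflow

    # Assumption: the API key is passed in or stored in ROBOFLOW_API_KEY.
    rf = Roboflow(api_key=api_key or os.environ["ROBOFLOW_API_KEY"])
    dataset = rf.workspace(workspace).project(project).version(version).download("yolov8")
    return dataset.location  # a folder such as Playing-Cards-4, containing data.yaml
```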

The last step is to run training with the W&B platform plugged in. This can take a while (even a few hours, depending on how many epochs are set):

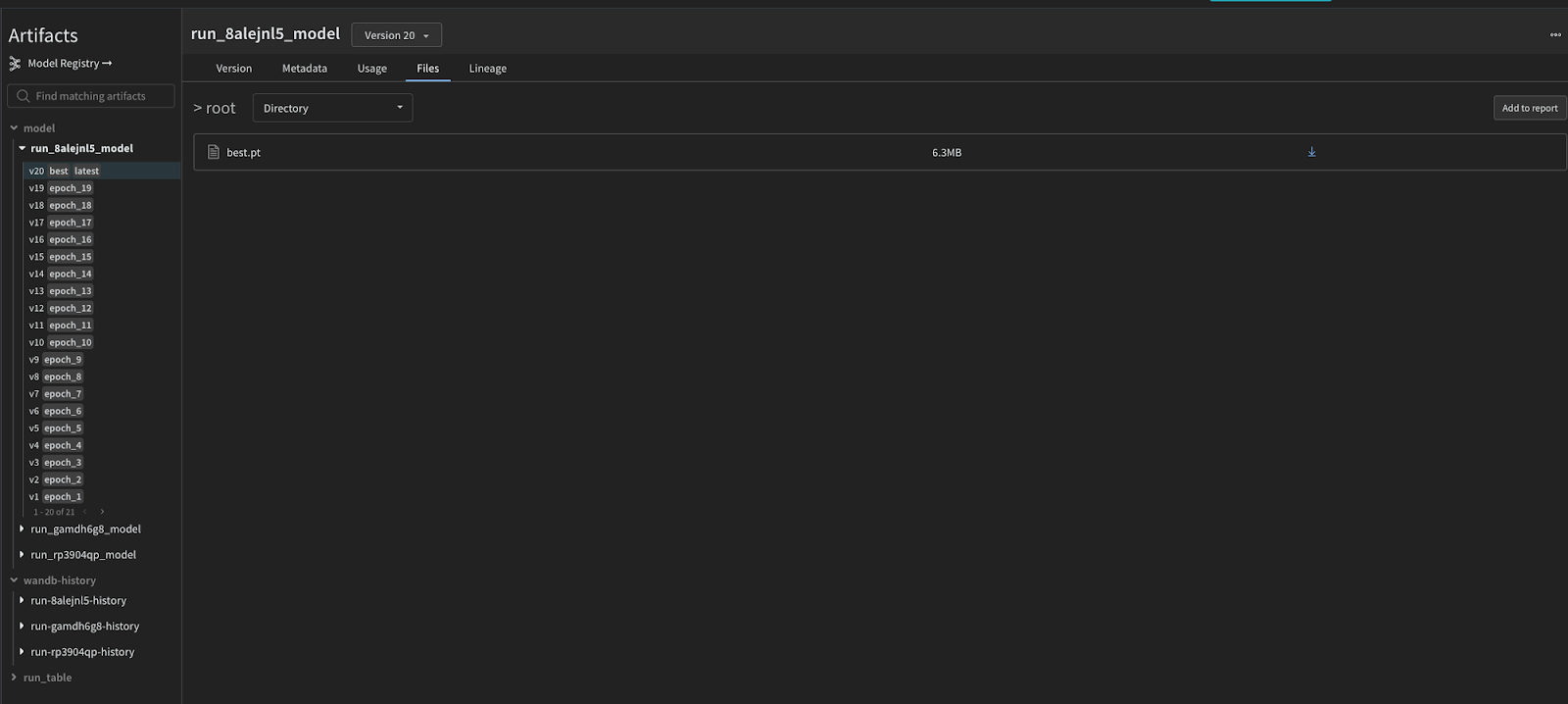

Integrating W&B takes only one line of code. Besides logging training information, it also stores our weights for every epoch on the platform, and the first 100GB of storage is free.
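One way to wire this up follows the W&B integration for Ultralytics, where `add_wandb_callback` is the one-line hook and `enable_model_checkpointing=True` uploads the checkpoints. This is a sketch rather than the notebook’s actual code: the epoch count and W&B project name are placeholders, and `device=0` assumes the Kaggle T4 is the first GPU. The imports are inside the function so the module loads without the libraries installed.

```python
def train_card_detector(data_yaml="Playing-Cards-4/data.yaml", epochs=50, wandb_project="card-detector"):
    """Fine-tune YOLOv8n on the card dataset while logging to W&B (placeholder defaults)."""
    import wandb
    from ultralytics import YOLO
    from wandb.integration.ultralytics import add_wandb_callback

    wandb.init(project=wandb_project, job_type="training")
    model = YOLO("yolov8n.pt")  # pretrained nano checkpoint as the starting point
    add_wandb_callback(model, enable_model_checkpointing=True)  # the one-line integration
    model.train(data=data_yaml, epochs=epochs, imgsz=640, device=0)  # 640x640 training images
    wandb.finish()
    return model
```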

The best thing is, even if something happens with the Kaggle platform, you can still obtain your weights from the W&B dashboard.

We can also check whether the parameters suit our case by analyzing the charts on the W&B dashboard, such as class loss and box loss.

Let’s check whether the model works by running a simple inference on an example image.
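A minimal version of this check with the Ultralytics Python API might look like the sketch below. The `conf=0.4` threshold is our choice, mirroring the threshold used later in the app. `summarize_detections` is a hypothetical helper that counts detections per label and lists the distinct cards, which matters because one card can produce more than one detection.

```python
from collections import Counter


def summarize_detections(labels):
    """Count detections per card label and return the distinct cards."""
    counts = Counter(labels)
    return counts, sorted(counts)


def detect_cards(weights_path, image_path, conf=0.4):
    """Run the trained detector on one image and return the predicted card labels."""
    from ultralytics import YOLO

    model = YOLO(weights_path)
    result = model(image_path, conf=conf)[0]  # one Results object per input image
    return [result.names[int(c)] for c in result.boxes.cls]
```

For the example image, the labels would be something like `["4H", "6D", "QD", "QD"]`, and `summarize_detections` would reduce them to the three distinct cards.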

It should print out the result:

image 1/1 /path_to_file/images_with_cards.jpg: 640x640 1 4H, 1 6D, 2 QDs, 76.3ms

Speed: 1.7ms preprocess, 76.3ms inference, 486.3ms postprocess per image at shape (1, 3, 640, 640)

As you can see, the model found one 4H (four of hearts), one 6D (six of diamonds), and two QDs (queen of diamonds). The model essentially looks for a card’s corner to identify it, and the queen had both corners visible, hence the two matches.

Great! Seems like it is working.

Now we need to export the model to a mobile-friendly format. There are a few options here: TFLite, TorchScript, or ONNX can all be used on mobile. We went with ONNX because it was easier to plug into our Unity app. Again, it’s only a one-liner.
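With the Ultralytics API, the export can be sketched as follows. The default weights path is the usual Ultralytics output location for a first training run and is an assumption; adjust it if your run saved elsewhere.

```python
def export_to_onnx(weights_path="runs/detect/train/weights/best.pt"):
    """Export trained YOLOv8 weights to ONNX at the 640x640 training resolution."""
    from ultralytics import YOLO

    # The one-liner: returns the path of the exported .onnx file.
    return YOLO(weights_path).export(format="onnx", imgsz=640)
```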

We can then use the model in Unity with the help of the Unity Sentis package in just a few lines of code.

Step #5: Model tests on mobile device

The model is ready to use. The next steps are preparing the input, running inference, and interpreting the model’s output.

Input preparation

Input preparation depends strongly on the use case. We can resize, crop, rotate, or enhance the bitmap with image processing tools. In our case, the model expects a 640x640 image. For now, let’s skip any other preprocessing, as it might not be necessary. A few lines of code and our input tensor is ready!
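In Unity this conversion happens in C# through Sentis. The Python sketch below only shows the layout involved. YOLOv8 models exported by Ultralytics take a float tensor of shape (1, 3, 640, 640) with RGB values scaled to 0..1, channels first. The flat pixel list in row-major order and the `to_chw_tensor` name are assumptions for illustration.

```python
def to_chw_tensor(pixels, width, height):
    """Convert row-major RGB pixels (0-255) into a flat NCHW float list and its shape."""
    if len(pixels) != width * height:
        raise ValueError("pixel count does not match width * height")
    data = []
    for channel in range(3):  # all red values first, then green, then blue
        data.extend(px[channel] / 255.0 for px in pixels)
    return data, (1, 3, height, width)
```

For a 640x640 image, this yields 3 × 640 × 640 = 1,228,800 values.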

The Resize function works like this: we first create a temporary RenderTexture and set it as active, copy the texture data on the GPU with the Blit function, then use ReadPixels to read from the temporary (active) RenderTexture into a texture of the given width and height, and finally apply the changed pixels to the GPU.

Don’t forget to release your temporary render texture! 🙂
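The GPU Blit has no direct Python equivalent. As a rough CPU illustration of how resizing maps output pixels back to source pixels, here is nearest-neighbor sampling. This is a simpler, swapped-in technique: Blit samples the source with the texture’s filter mode, which is typically bilinear, so it blends neighboring pixels instead.

```python
def resize_nearest(pixels, src_w, src_h, dst_w, dst_h):
    """Resize a row-major pixel list by picking the nearest source pixel for each output pixel."""
    out = []
    for y in range(dst_h):
        sy = min(src_h - 1, y * src_h // dst_h)  # source row for output row y
        for x in range(dst_w):
            sx = min(src_w - 1, x * src_w // dst_w)  # source column for output column x
            out.append(pixels[sy * src_w + sx])
    return out
```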

Integrating model and running inference

We simply create a worker that executes inference on our model. A few more lines and voilà!
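Sentis workers are C#, but before moving the model into Unity, the exported file can be checked on a desktop with ONNX Runtime. This is a swapped-in tool, not part of the app. The sketch assumes the model has a single input and a single output, which holds for a YOLOv8 detection export.

```python
def run_onnx(model_path, input_data):
    """Run the exported model on a (1, 3, 640, 640) float32 array and return the raw output."""
    import numpy as np
    import onnxruntime as ort

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name
    (output,) = session.run(None, {input_name: np.asarray(input_data, dtype=np.float32)})
    return output  # for this 52-class model, the shape should be (1, 56, 8400)
```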

Interpreting output

For simplicity, we created an enum that represents each card. Remember the data.yaml file from the training process? The enum values must be in the same order as the names in data.yaml. You can use any structure you want, or even read the names from the file directly, but the crucial part is keeping the order the same.
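Reading the names directly from the file guarantees the order matches. The sketch below assumes that `names` sits on a single line as a Python-style list or as an `{index: name}` mapping, both of which `ast.literal_eval` can parse. For block-style YAML, use a real YAML parser such as PyYAML instead.

```python
import ast


def read_class_names(data_yaml_text):
    """Return class names in data.yaml order; list index i is the model's class i."""
    for line in data_yaml_text.splitlines():
        if line.startswith("names:"):
            names = ast.literal_eval(line.split(":", 1)[1].strip())
            break
    else:
        raise ValueError("no single-line 'names:' entry found")
    if isinstance(names, dict):  # {0: '10C', 1: '10D', ...} style
        names = [names[i] for i in sorted(names)]
    names = list(names)
    if len(set(names)) != len(names):
        raise ValueError("duplicate class names")
    return names
```

A lookup in the other direction is then `{name: i for i, name in enumerate(names)}`.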

Our structure is ready, and we have the output tensor with a shape of [1, 56, 8400]. To turn this tensor into human-readable values, we need to know how to interpret it:

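- 1 is the batch size.

- 56 is the number of values per prediction: the first 4 are the bounding-box attributes (center x, center y, width, and height, in pixels of the 640x640 input) and the remaining 52 are class scores, one per card type. The higher a class’s score, the more likely it is that a card of that class is inside the bounding box.

- 8400 is the number of flattened grid cells. YOLOv8 is anchor-free and predicts from three feature maps; for a 640x640 input, they are 80x80, 40x40, and 20x20 (strides 8, 16, and 32), and 6,400 + 1,600 + 400 = 8,400.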

In other words, for each grid cell we have 56 values: four describing the bounding box and one score per class.
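The shape arithmetic and the indexing into the flattened output can be written out as follows. Row-major flattening is assumed, so value `[0, row, cell]` sits at position `row * 8400 + cell`. For example, the first class score of cell 0 is at index 4 × 8400 = 33,600.

```python
def grid_cells(img_size=640, strides=(8, 16, 32)):
    """Number of YOLOv8 predictions: one per cell of each detection head's feature map."""
    return sum((img_size // s) ** 2 for s in strides)


def flat_index(row, cell, num_cells):
    """Position of output[0, row, cell] in a row-major flattened [1, rows, cells] tensor."""
    return row * num_cells + cell


NUM_CELLS = grid_cells()  # 80*80 + 40*40 + 20*20
ROWS = 4 + 52  # cx, cy, w, h, then one score per card class
```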

Our code iterates over the class scores of each cell and keeps the highest one if it’s above a threshold of 0.4.
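That decoding loop can be sketched in Python (the app does it in C#). The flat row-major input and the `Detection` type are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    class_index: int  # position in data.yaml's names list
    score: float
    cx: float  # box center x, in model-input pixels
    cy: float  # box center y
    w: float
    h: float


def decode_output(output, num_classes=52, num_cells=8400, threshold=0.4):
    """Decode a flattened [1, 4 + num_classes, num_cells] YOLOv8 output.

    For each cell, keep the best-scoring class if its score exceeds the threshold.
    """
    expected = (4 + num_classes) * num_cells
    if len(output) != expected:
        raise ValueError(f"expected {expected} values, got {len(output)}")
    detections = []
    for cell in range(num_cells):
        best_class, best_score = -1, threshold
        for c in range(num_classes):
            score = output[(4 + c) * num_cells + cell]
            if score > best_score:
                best_class, best_score = c, score
        if best_class >= 0:
            cx, cy, w, h = (output[k * num_cells + cell] for k in range(4))
            detections.append(Detection(best_class, best_score, cx, cy, w, h))
    return detections
```

Neighboring cells often detect the same card, so the result can contain duplicates. Keeping only distinct class indices, or applying non-maximum suppression, reduces them to one entry per card.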

How the YOLOv8 model helped the app

- Using on-device AI, specifically YOLO, to detect cards reduces latency and improves recognition quality. A dedicated CNN is a better fit when the problem is well defined, like card recognition.

- On-device AI also improves privacy: we don't need to send photos of our room to third-party servers.

Why ChatGPT instead of a poker odds algorithm?

There are already good ways to compute winning probabilities in poker. We can use Monte Carlo simulation, or with today’s hardware, even brute-force every possibility. So why ChatGPT?
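For comparison, here is a minimal Monte Carlo equity estimator. It is not what the app uses. It assumes cards are written as rank plus suit with `T` for ten (so a detector label like `10C` would need mapping), deals opponents uniformly random hands, and splits the pot on ties. The hand ranking is a straightforward five-card evaluator applied to every 5-card subset of the 7 cards.

```python
import random
from collections import Counter
from itertools import combinations

RANKS = "23456789TJQKA"
SUITS = "CDHS"
DECK = [r + s for r in RANKS for s in SUITS]


def hand_rank(five):
    """Score a 5-card hand as a tuple; a larger tuple is a stronger hand."""
    ranks = sorted((RANKS.index(c[0]) for c in five), reverse=True)
    counts = Counter(ranks)
    # Ranks ordered by multiplicity, then rank (e.g. full house: trips before pair).
    ordered = sorted(counts, key=lambda r: (counts[r], r), reverse=True)
    shape = sorted(counts.values(), reverse=True)
    flush = len({c[1] for c in five}) == 1
    straight_high = None
    if len(counts) == 5:
        if ranks[0] - ranks[4] == 4:
            straight_high = ranks[0]
        elif ranks == [12, 3, 2, 1, 0]:  # A-2-3-4-5 "wheel"
            straight_high = 3
    if straight_high is not None and flush:
        return (8, straight_high)
    if shape[0] == 4:
        return (7, *ordered)
    if shape == [3, 2]:
        return (6, *ordered)
    if flush:
        return (5, *ranks)
    if straight_high is not None:
        return (4, straight_high)
    if shape[0] == 3:
        return (3, *ordered)
    if shape == [2, 2, 1]:
        return (2, *ordered)
    if shape[0] == 2:
        return (1, *ordered)
    return (0, *ranks)


def best_hand(cards):
    """Best 5-card score among 5 to 7 cards."""
    return max(hand_rank(combo) for combo in combinations(cards, 5))


def estimate_equity(hole, board, opponents, trials=2000, seed=None):
    """Monte Carlo share of the pot won against random opponent hands."""
    if opponents < 1:
        raise ValueError("need at least one opponent")
    rng = random.Random(seed)
    known = set(hole) | set(board)
    deck = [c for c in DECK if c not in known]
    missing = 5 - len(board)
    equity = 0.0
    for _ in range(trials):
        drawn = rng.sample(deck, 2 * opponents + missing)
        full_board = list(board) + drawn[2 * opponents:]
        mine = best_hand(list(hole) + full_board)
        rivals = [best_hand(drawn[2 * i:2 * i + 2] + full_board) for i in range(opponents)]
        best_rival = max(rivals)
        if mine > best_rival:
            equity += 1.0
        elif mine == best_rival:
            equity += 1.0 / (1 + rivals.count(mine))  # split pot among tied players
    return equity / trials
```

For example, `estimate_equity(["AH", "AD"], [], opponents=1, seed=0)` estimates pocket aces against one random hand.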

The main reason was to challenge ourselves to build an app that leverages both an embedded model and a large LLM like ChatGPT at the same time. Even if the problem seemed trivial in this case, we can easily transfer this knowledge to other domains to solve more sophisticated problems.

Additional Steps: Preprocessing

The code covered so far works fine if the input is “square enough” that resizing it to 640x640 doesn’t distort it too much. In our case, the camera feed from the AR glasses is 1280x720, so the width is almost double the height. In testing, the model couldn’t find any cards in this scenario.

To fix this problem, we came up with a very simple solution: because 1280 is exactly 640 x 2, we can crop the image into two 640x720 halves, resize each of them to 640x640, and run the model on them independently.

After that, all we have to do is concatenate the cards found in both halves.
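The crop-and-merge step can be sketched as follows. Each tile’s detections come back in 640x640 model space, so they are scaled back by the tile’s size (x by 640/640, y by 720/640) and shifted by the tile’s offset before concatenation. Collapsing a card seen in both halves to a single label is our own assumption, and the `Box` type is hypothetical.

```python
from dataclasses import dataclass

MODEL_SIZE = 640  # the model input is MODEL_SIZE x MODEL_SIZE


@dataclass(frozen=True)
class Box:
    label: str
    cx: float  # center x
    cy: float  # center y
    w: float
    h: float


def crop_regions(frame_w, frame_h, tile_w=MODEL_SIZE):
    """Split a frame into side-by-side tiles (x, y, w, h): 1280x720 -> two 640x720."""
    if frame_w % tile_w:
        raise ValueError("frame width must be a multiple of the tile width")
    return [(x, 0, tile_w, frame_h) for x in range(0, frame_w, tile_w)]


def to_frame_coords(box, region):
    """Map a box from 640x640 model space back into full-frame pixels."""
    x0, y0, rw, rh = region
    sx, sy = rw / MODEL_SIZE, rh / MODEL_SIZE  # undo the per-tile resize
    return Box(box.label, x0 + box.cx * sx, y0 + box.cy * sy, box.w * sx, box.h * sy)


def merge_tiles(results):
    """Concatenate detections from all tiles; results is a list of (region, boxes)."""
    boxes = [to_frame_coords(b, region) for region, tile_boxes in results for b in tile_boxes]
    cards = sorted({b.label for b in boxes})  # a card seen in both halves counts once
    return boxes, cards
```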

The End Product and Its Performance

The Texas Hold’em poker AR app was a great adventure in which many distinct parts had to work together:

- LLM integration

- on-device model integration

- Unity integration

- AR glasses connection

The final product recognizes cards most of the time and gives fairly good estimates of the player’s chances of winning. While Unity does a fine job of connecting all the parts of our app, many variables affect the quality of those parts and thus how accurate the results can be:

- how well the embedded model recognizes cards

- the quality of the images provided by the AR glasses’ camera

- the capabilities of the LLM that we ask to estimate the chances

In our case, we struggled to get reliable and accurate responses from ChatGPT for most of the development process. But when GPT-4o was released during our last sprint, it immediately solved our problems with that part of the application.
